How we built an autonomous AI performance engineer

After months of development, we're excited to finally introduce dba: the superhuman engineer designed for end-to-end PostgreSQL database optimization. Let's walk through our journey building dba, the technical challenges we faced, and how we tackled them.

The Opportunity

Managing databases at scale is no small feat. Postgres is robust, but it's also a labyrinth of layers that demand constant attention. Performance optimization, query tuning, monitoring, and troubleshooting can consume a developer's entire week. Even seasoned DBAs can get trapped in a cycle of repetitive performance issues that pull their focus away from more fruitful work.

We saw the gap between passive database monitoring and active performance optimization. We knew that the solution had to be autonomous, specialized and better than a parrot-like LLM. That's what inspired dba: the AI agent that leverages deep Postgres knowledge to analyze and improve your specific database environment.

Technical Architecture

dba is built on a modern stack that includes:

- Rust Backend: Our API server is written in Rust for performance, reliability, and type safety. It handles connections to Postgres instances, processes telemetry, and manages the AI interaction pipeline.

- Next.js Frontend: Our UI is built with Next.js, React, and Tailwind CSS. The result is an interface that feels intuitive and responsive.

- Knowledge Base: We've integrated multiple sources of Postgres expertise:

  - Indexed Postgres documentation

  - Mailing list archives and summaries

  - Planet Postgres articles

  - Common troubleshooting patterns

- LLM Integration: We use a combination of retrieval-augmented generation (RAG) techniques to provide accurate, context-aware responses.
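The sketch below illustrates the basic retrieval-augmented generation loop under simple assumptions: score knowledge-base chunks against the question, keep the top `k`, and place them ahead of the question in the prompt. Term overlap stands in for whatever embedding or ranking model dba actually uses, and `Chunk`, `retrieve`, and `build_prompt` are hypothetical names.

```rust
use std::collections::HashSet;

/// A piece of the knowledge base (docs section, mailing-list summary, blog post).
#[derive(Debug, Clone)]
pub struct Chunk {
    pub source: String,
    pub text: String,
}

/// Lowercased alphanumeric tokens; a crude stand-in for a real tokenizer.
fn tokens(s: &str) -> HashSet<String> {
    s.split(|c: char| !c.is_alphanumeric() && c != '_')
        .filter(|t| !t.is_empty())
        .map(|t| t.to_lowercase())
        .collect()
}

/// Return up to `k` chunks sharing the most distinct terms with the question.
/// Assumption: term overlap substitutes for embedding similarity here.
pub fn retrieve<'a>(question: &str, kb: &'a [Chunk], k: usize) -> Vec<&'a Chunk> {
    let q = tokens(question);
    let mut scored: Vec<(usize, &Chunk)> = kb
        .iter()
        .map(|c| (tokens(&c.text).intersection(&q).count(), c))
        .filter(|(score, _)| *score > 0)
        .collect();
    // Highest score first; sort_by is stable, so ties keep knowledge-base order.
    scored.sort_by(|a, b| b.0.cmp(&a.0));
    scored.into_iter().take(k).map(|(_, c)| c).collect()
}

/// Place the retrieved context, labeled by source, ahead of the user's question.
pub fn build_prompt(question: &str, context: &[&Chunk]) -> String {
    let mut prompt = String::from("Answer using the Postgres context below.\n\n");
    for c in context {
        prompt.push_str(&format!("[{}]\n{}\n\n", c.source, c.text));
    }
    prompt.push_str(&format!("Question: {question}\n"));
    prompt
}
```

Labeling each chunk with its source lets the model (and the user) see whether advice came from the official docs or from community discussion.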

Connection Management

Securing and managing database connections was critical to making dba work seamlessly, especially at scale. We built a system that supports multiple connection methods, from direct connection strings to cloud services like AWS RDS, Tembo Cloud, and Supabase. To keep connections from becoming a bottleneck, we incorporated connection pooling using tools like Pgpool-II and PgBouncer. We also keep tenant data locked down and isolated through Postgres row-level security (RLS).
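A common way to isolate tenants with RLS is sketched below: enable row-level security on a shared table, add a policy comparing a `tenant_id` column with a per-transaction setting, and set that value at the start of every transaction. The `tenant_id uuid` column, the `app.tenant_id` setting name, and the helper names are assumptions for illustration; the post doesn't describe dba's schema.

```rust
/// Double-quote an SQL identifier, doubling any embedded quotes as Postgres expects.
pub fn quote_ident(name: &str) -> String {
    format!("\"{}\"", name.replace('"', "\"\""))
}

/// DDL that isolates tenants on `table` via RLS.
/// Assumes a `tenant_id uuid` column and a custom setting named `app.tenant_id`.
pub fn tenant_rls_ddl(table: &str) -> Vec<String> {
    let t = quote_ident(table);
    // missing_ok = true returns NULL for an unknown setting, and NULLIF maps an
    // empty value to NULL, so an unset tenant matches no rows instead of erroring.
    let predicate = "tenant_id = NULLIF(current_setting('app.tenant_id', true), '')::uuid";
    vec![
        format!("ALTER TABLE {t} ENABLE ROW LEVEL SECURITY"),
        // FORCE applies the policy to the table owner as well.
        format!("ALTER TABLE {t} FORCE ROW LEVEL SECURITY"),
        format!("CREATE POLICY tenant_isolation ON {t} USING ({predicate}) WITH CHECK ({predicate})"),
    ]
}

/// Run first in every transaction, binding `$1` to the tenant's id.
/// The third argument (is_local = true) scopes the value to the current transaction.
pub const SET_TENANT_SQL: &str = "SELECT set_config('app.tenant_id', $1, true)";
```

Because the setting is transaction-local, it also fits PgBouncer's transaction pooling mode: no tenant value outlives the transaction on a shared server connection. Superusers and roles with `BYPASSRLS` skip policies entirely, so the application should connect as an ordinary role.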

We talked to roughly sixty developers to make sure we were tailoring dba to modern software engineers. Unsurprisingly, a common hesitation was security, so we configured read-only access options that give roles strict, controlled access and prevent accidental changes. It's a streamlined, secure approach designed to keep your database from breaking.
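As an illustration of a read-only option, the statements below create a role that can read and monitor but not write. They are standard Postgres SQL; the role, database, and schema names and the `read_only_role_sql` helper are hypothetical. Note that `default_transaction_read_only` is only a default that a client can turn off with `SET`, so the privileges granted (and not granted) are what actually prevent writes.

```rust
/// Double-quote an SQL identifier, doubling any embedded quotes.
fn quote_ident(name: &str) -> String {
    format!("\"{}\"", name.replace('"', "\"\""))
}

/// Statements for a role that can connect, read one schema, and read stats views.
/// Credentials are configured separately; none are embedded here.
pub fn read_only_role_sql(role: &str, database: &str, schema: &str) -> Vec<String> {
    let (r, d, s) = (quote_ident(role), quote_ident(database), quote_ident(schema));
    vec![
        format!("CREATE ROLE {r} LOGIN"),
        format!("GRANT CONNECT ON DATABASE {d} TO {r}"),
        format!("GRANT USAGE ON SCHEMA {s} TO {r}"),
        // Existing tables...
        format!("GRANT SELECT ON ALL TABLES IN SCHEMA {s} TO {r}"),
        // ...and tables created later by the role running this statement.
        format!("ALTER DEFAULT PRIVILEGES IN SCHEMA {s} GRANT SELECT ON TABLES TO {r}"),
        // Built-in role (Postgres 10+) for pg_stat_* views and related functions.
        format!("GRANT pg_monitor TO {r}"),
        // Defense in depth only: clients can override this default with SET.
        format!("ALTER ROLE {r} SET default_transaction_read_only = on"),
    ]
}
```

On Postgres versions before 15, `PUBLIC` can still create objects in the `public` schema unless that default has been revoked, which is worth checking before calling such a role strictly read-only.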

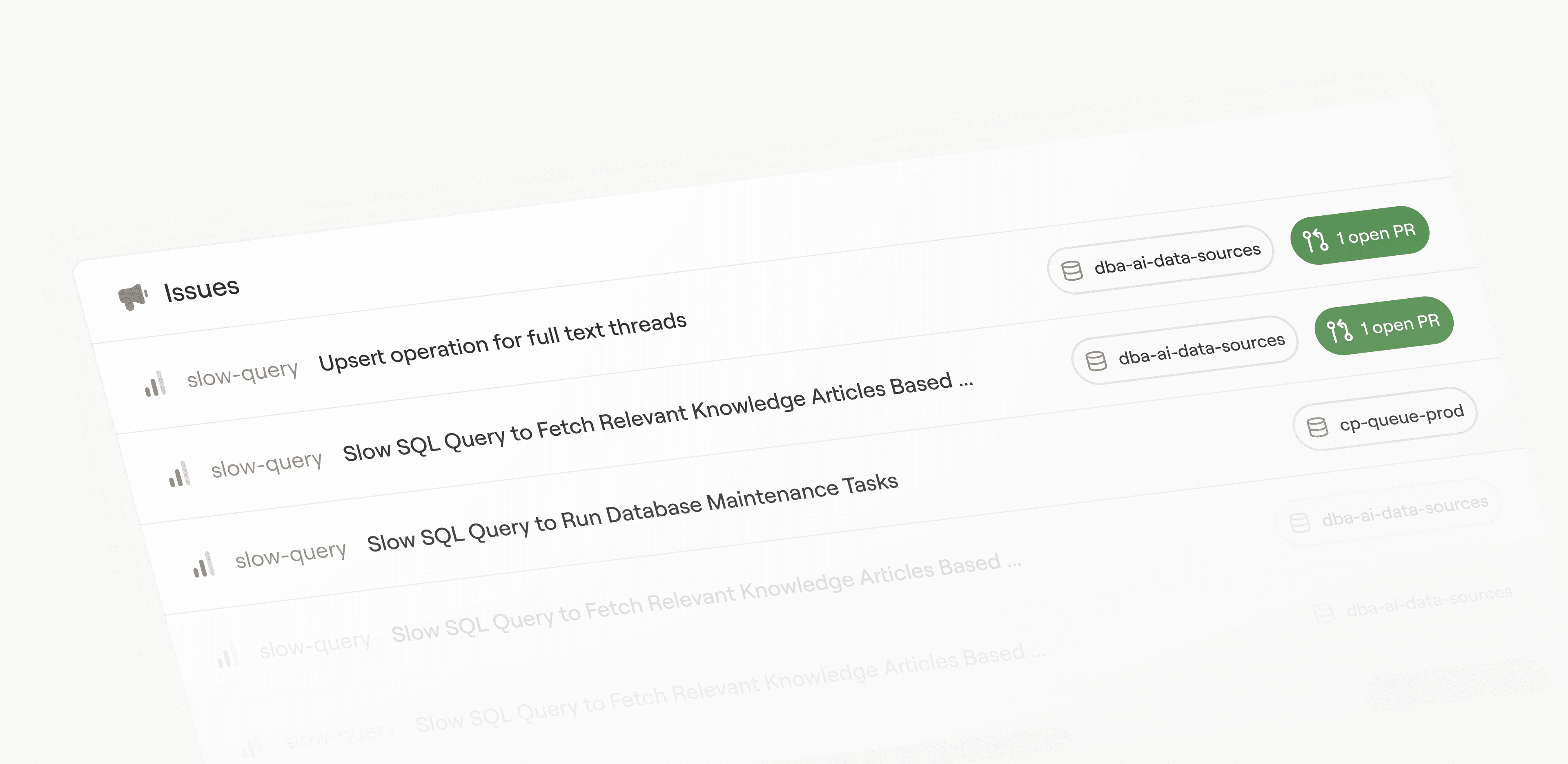

Intelligent Issue Detection

We didn't just want dba to react to problems. We wanted it to anticipate them. dba proactively detects issues with:

- Metrics Analysis: dba automatically collects and analyzes your Postgres metrics, processing them in real time to flag performance issues.

- Pattern Recognition: dba detects common issues like slow queries, resource bottlenecks, or unusual connection patterns. If it notices a potential Postgres alert, it tells you.

- Issue Prioritization: We quickly realized that dba shouldn't just dump an unclassified list of problems. Now, it ranks issues by severity and impact so that critical alerts come first.
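The detection flow above (collect metrics, match known patterns, rank by severity and impact) might look roughly like this. The snapshot fields map to real Postgres sources such as `blks_hit` and `blks_read` in `pg_stat_database`, but every threshold, weight, and type name here is an illustrative assumption rather than dba's actual rule set.

```rust
/// Severity levels; the discriminants double as ranking weights (an assumption).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Severity {
    Warning = 1,
    Critical = 3,
}

#[derive(Debug)]
pub struct Issue {
    pub title: String,
    pub severity: Severity,
    /// Assumed scale: rough fraction of the workload affected, 0.0..=1.0.
    pub impact: f64,
}

impl Issue {
    fn score(&self) -> f64 {
        f64::from(self.severity as u8) * self.impact
    }
}

pub struct QueryStat {
    pub query: String,
    pub mean_ms: f64,
    /// Fraction of total execution time spent in this query, 0.0..=1.0.
    pub time_share: f64,
}

/// One collection cycle's worth of metrics.
pub struct Snapshot {
    pub blks_hit: u64,           // pg_stat_database.blks_hit
    pub blks_read: u64,          // pg_stat_database.blks_read
    pub active_connections: u32, // e.g. client backends in pg_stat_activity
    pub max_connections: u32,    // SHOW max_connections
    pub queries: Vec<QueryStat>, // e.g. from pg_stat_statements
}

/// Match the snapshot against a few known patterns, then rank by severity x impact.
pub fn detect(s: &Snapshot) -> Vec<Issue> {
    let mut issues = Vec::new();

    // Buffer cache hit ratio: too many block reads missing shared buffers.
    let total = s.blks_hit + s.blks_read;
    if total > 0 {
        let ratio = s.blks_hit as f64 / total as f64;
        if ratio < 0.99 {
            issues.push(Issue {
                title: format!("Cache hit ratio {:.1}%", ratio * 100.0),
                severity: if ratio < 0.90 { Severity::Critical } else { Severity::Warning },
                impact: 1.0, // affects every query on the database
            });
        }
    }

    // Connection saturation relative to max_connections.
    if s.max_connections > 0 {
        let usage = f64::from(s.active_connections) / f64::from(s.max_connections);
        if usage >= 0.8 {
            issues.push(Issue {
                title: format!("{} of {} connections in use", s.active_connections, s.max_connections),
                severity: if usage >= 0.95 { Severity::Critical } else { Severity::Warning },
                impact: usage,
            });
        }
    }

    // Slow queries by mean execution time.
    for q in &s.queries {
        if q.mean_ms > 500.0 {
            issues.push(Issue {
                title: format!("Slow query ({:.0} ms avg): {}", q.mean_ms, q.query),
                severity: if q.mean_ms > 5000.0 { Severity::Critical } else { Severity::Warning },
                impact: q.time_share,
            });
        }
    }

    // Most severe, most impactful issues first.
    issues.sort_by(|a, b| b.score().total_cmp(&a.score()));
    issues
}
```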

Building the Knowledge Pipeline

For dba to be useful, it needed a rock-solid knowledge base. We built a pipeline that processes and indexes relevant sources and feeds them into a comprehensive Postgres knowledge base. The first key input was the official documentation, indexed in a way that preserves its context. Next came community discussions from the mailing lists (which required custom parsing), followed by blog posts from Planet Postgres.
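Context preservation can be illustrated with a heading-aware chunker: split a document into sections and tag every chunk with its full heading path, so a retrieved fragment still says where it came from. This is a minimal sketch assuming Markdown-style `#` headings and a size budget in bytes per chunk; `DocChunk` and `chunk_with_headings` are hypothetical, and dba's actual parsers (including the mailing-list handling) aren't described in the post.

```rust
/// A chunk of documentation plus the heading path it came from.
#[derive(Debug, PartialEq)]
pub struct DocChunk {
    pub heading_path: String,
    pub body: String,
}

/// Emit the buffered section as one or more chunks, each at most `max_bytes`
/// long (unless a single word is longer), all tagged with the same heading path.
fn flush(path: &[String], body: &mut String, max_bytes: usize, out: &mut Vec<DocChunk>) {
    let section = std::mem::take(body); // also empties the buffer
    let text = section.trim();
    if text.is_empty() {
        return;
    }
    let heading_path = path.join(" > ");
    let mut current = String::new();
    for word in text.split_whitespace() {
        if !current.is_empty() && current.len() + 1 + word.len() > max_bytes {
            out.push(DocChunk { heading_path: heading_path.clone(), body: std::mem::take(&mut current) });
        }
        if !current.is_empty() {
            current.push(' ');
        }
        current.push_str(word);
    }
    out.push(DocChunk { heading_path, body: current });
}

/// Split Markdown-like text on `#` headings, keeping the heading path per chunk.
pub fn chunk_with_headings(doc: &str, max_bytes: usize) -> Vec<DocChunk> {
    let mut path: Vec<String> = Vec::new();
    let mut body = String::new();
    let mut out = Vec::new();
    for line in doc.lines() {
        let level = line.chars().take_while(|&c| c == '#').count();
        if level > 0 && line[level..].starts_with(' ') {
            // A new heading closes the previous section...
            flush(&path, &mut body, max_bytes, &mut out);
            // ...and replaces everything at its level or deeper in the path.
            path.truncate(level - 1);
            path.push(line[level..].trim().to_string());
        } else {
            body.push_str(line);
            body.push('\n');
        }
    }
    flush(&path, &mut body, max_bytes, &mut out);
    out
}
```

A chunk from a `## B-Tree` subsection under `# Indexes` is stored with the path `Indexes > B-Tree`, which can be prepended to the chunk text when it is embedded or shown to the model.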

Of course, we also have the advantage of in-house Postgres experts to train our LLM. Because of this foundation, dba can provide seasoned advice and take action on Postgres alerts.

Challenges We Faced

Building dba meant failing fast and learning through iteration. Below we detail the most important lessons we’ve learned thus far.

Context Management

When dba is investigating a Postgres database, context is king. We built efficient context retrieval systems that pull in the right data when it's needed. We also added conversation memory and summarization, because most developers don't have time to keep reminding the system what's going on. Expecting a one-size-fits-all solution was naïve, so we built specialized tools for different types of database analysis.
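One way to implement conversation memory with summarization is a rolling window: keep the most recent turns verbatim and fold older ones into a running summary. The sketch below assumes a `summarize` function supplied by the caller (in practice this would be an LLM call); `Memory`, its methods, and the window size are hypothetical.

```rust
use std::collections::VecDeque;

/// Rolling conversation memory: recent turns verbatim, older turns summarized.
pub struct Memory<F: Fn(&str, &[String]) -> String> {
    summary: String,
    recent: VecDeque<String>,
    max_recent: usize,
    /// Assumed summarizer: (previous summary, evicted turns) -> new summary.
    summarize: F,
}

impl<F: Fn(&str, &[String]) -> String> Memory<F> {
    pub fn new(max_recent: usize, summarize: F) -> Self {
        Self { summary: String::new(), recent: VecDeque::new(), max_recent, summarize }
    }

    /// Record a turn; once the window overflows, fold the oldest turns into the summary.
    pub fn push(&mut self, turn: impl Into<String>) {
        self.recent.push_back(turn.into());
        if self.recent.len() > self.max_recent {
            let overflow = self.recent.len() - self.max_recent;
            let evicted: Vec<String> = self.recent.drain(..overflow).collect();
            self.summary = (self.summarize)(&self.summary, &evicted);
        }
    }

    /// Context for the next model request: summary first, then recent turns.
    pub fn context(&self) -> String {
        let mut out = String::new();
        if !self.summary.is_empty() {
            out.push_str(&format!("Summary so far: {}\n", self.summary));
        }
        for turn in &self.recent {
            out.push_str(turn);
            out.push('\n');
        }
        out
    }
}
```

Bounding the verbatim window keeps prompt size roughly constant regardless of conversation length, at the cost of detail in older turns.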

Safety and Accuracy

We quickly learned that we had to keep dba from making suggestions that could tank your production database. We tested against sample databases with known issues to ensure dba's suggestions wouldn't harm a range of workloads. We also implemented safety classifications for suggestions, so dba clearly distinguishes between "Hey, here's some read-only info" and "Hey, you might want to back up before trying this." And before any change goes live, dba asks for your confirmation.
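A safety classification of this kind can be sketched as a three-level label on every suggested statement, with anything above read-only gated on explicit confirmation. The keyword-based classifier here is a deliberately simple assumption for illustration; a production system would need a real SQL parser, since, for example, a `SELECT` can call a function that writes. `Safety`, `classify`, and `may_execute` are hypothetical names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Safety {
    ReadOnly,
    Caution,
    BackupFirst,
}

impl Safety {
    /// User-facing label attached to a suggestion.
    pub fn label(self) -> &'static str {
        match self {
            Safety::ReadOnly => "read-only: safe to run",
            Safety::Caution => "changes state: review before running",
            Safety::BackupFirst => "potentially destructive: back up first",
        }
    }
}

/// Uppercased word tokens of a statement.
fn words(sql: &str) -> Vec<String> {
    sql.split(|c: char| !c.is_alphanumeric() && c != '_')
        .filter(|w| !w.is_empty())
        .map(str::to_uppercase)
        .collect()
}

/// Conservative keyword heuristic: anything not recognized is treated as risky.
pub fn classify(sql: &str) -> Safety {
    let w = words(sql);
    let has = |kw: &str| w.iter().any(|x| x == kw);
    match w.first().map(String::as_str) {
        // EXPLAIN ANALYZE executes its statement, so only plain EXPLAIN is read-only.
        Some("EXPLAIN") if !has("ANALYZE") => Safety::ReadOnly,
        Some("SELECT" | "SHOW") => Safety::ReadOnly,
        // Online, non-destructive tuning steps.
        Some("CREATE") if has("CONCURRENTLY") => Safety::Caution,
        Some("ANALYZE" | "SET") => Safety::Caution,
        // DROP, ALTER, UPDATE, DELETE, VACUUM FULL, EXPLAIN ANALYZE, ...
        _ => Safety::BackupFirst,
    }
}

/// Only read-only statements run without the user's explicit confirmation.
pub fn may_execute(sql: &str, confirmed: bool) -> bool {
    classify(sql) == Safety::ReadOnly || confirmed
}
```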

Real-time Query Analysis

Attacking slow queries meant processing a lot of data, fast. So we built specialized tools to extract and parse EXPLAIN plans without slowing things down, and we compared query patterns to spot potential optimizations. Grounding dba's suggestions in this analysis was fundamental to making them viable.
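Extracting signals from an EXPLAIN plan can be sketched by parsing Postgres's text-format output, where each plan node line carries a node type and an estimate of the form `(cost=startup..total rows=N width=W)`. The sketch pulls out those fields and flags sequential scans with large row estimates. The row threshold and all names are assumptions; a real implementation would more likely request `EXPLAIN (FORMAT JSON)` and deserialize it rather than parse text.

```rust
#[derive(Debug, PartialEq)]
pub struct PlanNode {
    pub node: String,
    pub startup_cost: f64,
    pub total_cost: f64,
    pub rows: u64,
}

/// Parse plan-node lines from text-format EXPLAIN output. Detail lines such as
/// "Hash Cond: ..." carry no cost estimate and are skipped.
pub fn parse_plan(text: &str) -> Vec<PlanNode> {
    text.lines().filter_map(parse_node_line).collect()
}

fn parse_node_line(line: &str) -> Option<PlanNode> {
    let open = line.find("(cost=")?;
    // Strip indentation and the "->" child marker from the node description.
    let node = line[..open].trim().trim_start_matches("->").trim().to_string();
    let est = &line[open + "(cost=".len()..];
    let est = &est[..est.find(')')?]; // e.g. "0.00..22.70 rows=1270 width=40"
    let mut parts = est.split_whitespace();
    let (startup, total) = parts.next()?.split_once("..")?;
    let rows = parts.next()?.strip_prefix("rows=")?;
    Some(PlanNode {
        node,
        startup_cost: startup.parse().ok()?,
        total_cost: total.parse().ok()?,
        rows: rows.parse().ok()?,
    })
}

/// Flag sequential scans (including parallel ones) estimated to return at least `min_rows`.
pub fn large_seq_scans(plan: &[PlanNode], min_rows: u64) -> Vec<&PlanNode> {
    plan.iter()
        .filter(|n| n.node.contains("Seq Scan") && n.rows >= min_rows)
        .collect()
}
```

For a line such as `->  Seq Scan on orders o  (cost=0.00..22.70 rows=1270 width=40)`, the parser yields the node `Seq Scan on orders o` with an estimated 1270 rows, which a rule like `large_seq_scans` could then surface as an indexing candidate.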

What's Next

We’re already working on:

- Expanded connections: More cloud providers and simplified self-hosted setup

- Advanced monitoring: Deeper insights into database health and performance

- Customization: Tailored recommendations based on your specific workload patterns

- Integration ecosystem: Connecting with existing DevOps and monitoring tools

Get Started

dba is now in alpha testing, and we are letting select users in every week.

We're excited to hear your feedback as we continue improving dba.